AI, as of writing this in November 2024, will reliably get you ~75% of the way to a job well done.

We might quibble over the exact percent (60%? 30%? 80%?), but we can all agree that it’s extraordinary. A machine can now rival a human intern.

So for argument’s sake, let’s agree that human intern = 75% = average AI output. Let’s also agree that the bar for most passable work is set at 80%. With AI’s help, you’re no longer starting from scratch, but finessing 75% to 80%.

This is nothing short of miraculous, and it’s where the current hype and conversation around AI have focused. We’re so transfixed by the speed and wonder of these gains that we lose perspective:

The output is still intern work.

To make use of an intern, you need the skill and discernment to consistently generate 80% output yourself.

Imagine giving a highly competent intern to someone who has no idea what they’re doing. You end up with a very confused intern…and someone who still has no idea what they’re doing.

That’s because interns get tactics right, but lack context. They might get a lot done, but they don’t know how their work fits into the bigger picture. They’re ill-equipped to assess whether or not they should have done the work at all.

It’s also important to remember that becoming someone who can make use of a competent intern is much harder than becoming a competent intern.

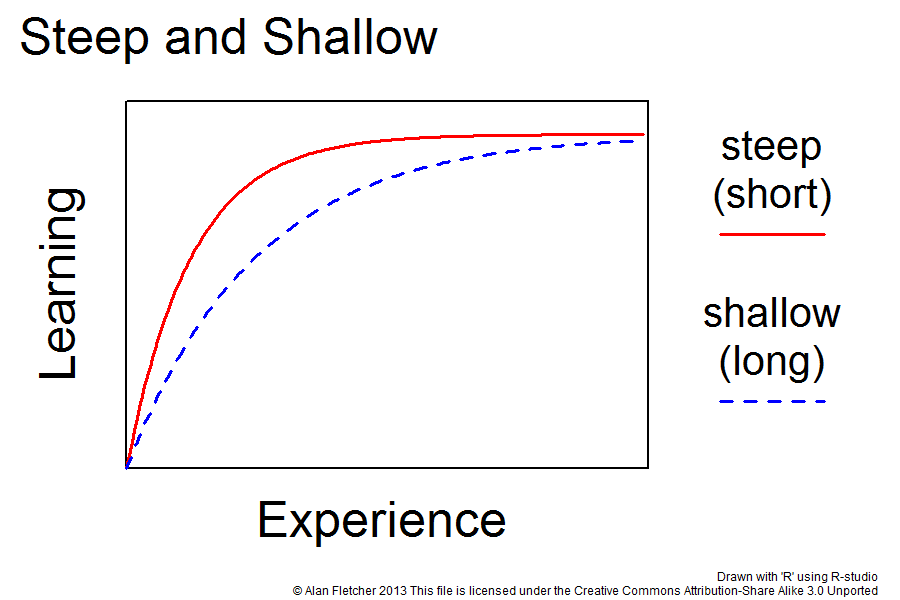

It might take months to master intern-level skills, but becoming a good employee can take years. To be a seasoned senior? A true master of a trade? This kind of mastery can take decades, if it happens at all.

This is absolutely not to say that 75% work is worthless. Half-baked ideas, prototypes, and drafts are prerequisites to innovation. And, many times, half-baked work is better than no work at all.

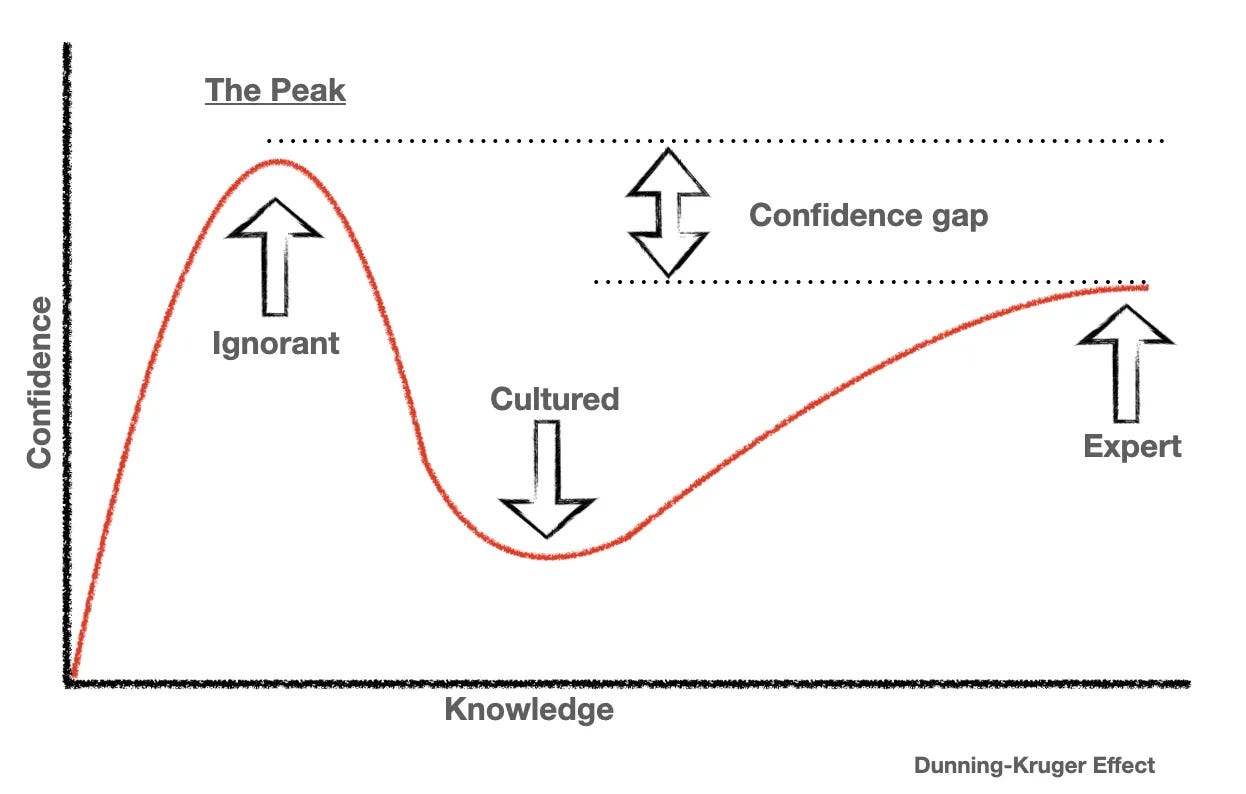

The problem arises when you lack the discernment to differentiate between half-baked output and highly skilled output.

When you’re first starting out as an intern, you require a lot of active correction. You lack the capability to take your 75% output and finesse it further because you don’t know what you don’t know.

But that’s just today, many say. The rate of growth of AI is astronomical. Things like compounding reasoning and improved models/compute will surely help us overcome these hurdles!

Theoretically, this checks out. But practically, these hurdles become exponentially harder to overcome the further up the skill chain you move:

Data availability: By definition, highly competent work is rare. The scale isn’t linear but exponential: the higher the quality, the rarer the work becomes.

Data complexity: Not only does the data itself get rarer, it also becomes more complex. Mastery is not just about how you do things, but the ease with which you can comprehend and adapt to different circumstances. The models required to process this higher-order data must be that much more nuanced and intricate.

Evaluations: You then need the competency to train and fine-tune outputs from these models. You need a true subject matter expert to conduct evaluations to ensure that outputs are consistently reliable.

So the barriers for AI to truly gain competence in higher orders of work are very real. But…no one knows how quickly this is all moving. Say…we come out with an AI superbrain tomorrow.

Even then, one very human, immutable barrier still remains.

If you have neither taste, discernment, nor skill, you won’t be able to use any kind of AI tooling to its full effect.

We’re already seeing this play out today. When you give a highly competent engineer AI, they enjoy extraordinary productivity gains. It cuts out the most boring and repetitive parts of their work. When things go wrong, they can quickly identify hallucinations and troubleshoot reasoning gaps. Their instincts and expertise act as guardrails so the AI doesn’t tailspin by building mindlessly and haphazardly.

Hand the same tools to a green engineer, and they often spend more time trying to understand what the AI is doing than actually engineering. They are literally not skilled enough to comprehend the output, because they couldn’t generate the output on their own. Without the guardrail of expertise, they create massive quagmires that they have no way of fixing…or even understanding.

When we talk about AI gains today, we are almost exclusively talking about the productivity gains that are experienced by seasoned experts.

We rarely hear the perspective of junior, inexperienced users. After all, newbies who try these AI tools and find themselves way out of their depth aren’t exactly rushing to publish their stories on LinkedIn.

This means that our conversations re: AI today largely take expertise for granted. Magically, we expect to always have a population of skilled experts to make use of these tools and further these productivity gains.

There are many who hyperfocus on these early wins to justify human replacement. AI companies rush to sell cost savings and headcount reduction, shilling intern-level output as a viable replacement for seasoned talent. They are met by boards who are over-eager to believe these convenient stories and cleave back some EBITDA.

It’s driven by greed, not true innovation. When Sundar Pichai announced that 25% of Google’s code was now written by AI, its stock shot up by 8% overnight. Any senior engineer who has tried any kind of AI code gen would call bullshit, because it is. But it behoves Google to push this narrative - it sells Gemini to B2B buyers and it sells the narrative of human replacement (AKA: higher future gross margins) to their shareholders.

This is the kind of rhetoric that has cast a shadow of fear and anxiety over the conversation around AI.

The one-man unicorn is here! Upskill and adapt or be left to wither away and die like the dinosaur you are! Do more!

Designers are being encouraged to “engineer” by using AI for code gen. Product managers are pushed to “design” by using AI to make wireframes. Developers “product manage” by using AI to generate PRDs.

There are many people who do this from a genuine place of growth and learning, but for most, the vibe is all wrong. They’re not so much learning as frantically doing more, more, more out of anxious desperation.

Case in point, there’s tons of ‘thought leadership’ today that purports to turn you into an AI-powered super machine. But…you’ll find very little content that addresses the elephant in the room - what happens to the generation just entering the workforce?

The pandemic hobbled their personal and professional growth, and now, in the anxious rush of more, we’ve left the future supply of skilled AI users in the lurch.

If we all just paused to think, it would become clear that “do more” is the worst possible advice. In the age of AI, raw output will become a throwaway commodity. What matters will be expertise, taste, and discernment.

AI shouldn’t make us feel fear and anxiety. In fact, the trillions of dollars spent trying to replicate even the most basic functions of the human mind should make us value our human brains, experiences and intuition all the more.

Even more, it should make us respect other people’s brains, experiences, and intuition.

The one-man unicorn is a toxic holy grail. Not only is it rooted in fear and hubris, but it ignores the immutable fact that human beings just don’t function well as islands.

Imagine: a single-subject expert who leverages AI to generate half-baked strategy, half-baked product research, half-baked design, and half-baked code. They aren’t smart enough to realize that what they’ve done is half-baked. They inherently disrespect all disciplines that aren’t their own. In the pursuit of staying relevant, they forgo connection, mastery, and collaboration.

How misguided. How fucking sad.

Not only is the vibe off the rails, the entire effort is futile. Despite their best efforts, mean, myopic minds produce mean, myopic results. Fear strangles creativity, precludes dreaming, and makes the world smaller.

Don’t be someone who looks at paradigm-breaking technology like AI and sees only cheaper, faster, and more of the same.

You won’t stand a chance against teams of good humans, working humbly, collaboratively, and leveraging AI with finesse.

They won’t be heads down building faster horse-drawn carriages, but wondrous new crafts to places unknown.

Christine Miao is the creator of technical accounting–the practice of tracking engineering maintenance, resourcing, and architecture.

There are plenty of tools that track the byproducts of engineering–features, deployment velocity, and roadmap–metrics where naïve AI usage will overperform.

Technical accounting surfaces the work of engineering itself, where the carrying costs of agent usage will show through. That includes the very hard, often thankless, but existential work of bringing up new talent.

A huge thank you to Lori Mazor, Matt Casey, April Kim, and Marty Betz for lending their perspective and reading prior drafts of this article.
